New report finds data sharing with federal immigration agencies might violate Washington law

Immigrant-rights advocates are pointing to new findings by the University of Washington Center for Human Rights that raise concerns about how surveillance technology is used in Washington state.

The report argues that sharing of license plate data violates the state’s Keep Washington Working Act.

The University of Washington Center for Human Rights analyzed data, obtained through public records requests, on the use of automated license plate readers (ALPRs) by state and local law enforcement agencies. The report found these agencies share this data with Immigration and Customs Enforcement and Customs and Border Protection.

Maru Mora-Villalpando, from immigrant detention abolitionist group La Resistencia, shared in a webinar that her organization is discussing collective action against further expansion of surveillance technologies.

“We hope the release of this report serves as a starting place for our communities to educate themselves on the situation we find ourselves in,” Mora-Villalpando said.

Mora-Villalpando shared in the webinar that communities of color and undocumented immigrants, as well as documented non-citizens, are targets for this kind of surveillance. Those inequities in artificial intelligence use are the concern of many as AI technology rapidly expands.

Angelina Godoy, director of the Center for Human Rights, explained in the webinar that the data captured by ALPRs is not in itself considered to be a privacy violation, as anyone can see and record someone’s license plate. ALPRs can be used by anyone, not just law enforcement agencies, since this information is considered public.

The concern, Godoy said, is aggregating this data with other data in order to track someone’s movements through space and time.

“We have these local law enforcement and private parties setting up these cameras that capture the images of every license plate that drives by a given point, or that are operating a camera that itself is mobile and gathering images of license plates,” Godoy said. “And so that really enables you to track people.”

Godoy said this data could be used for legitimate reasons, but the Center for Human Rights is concerned the data could be used to track migrants in Washington, which is a sanctuary state that does not enforce federal immigration statutes.

The Keep Washington Working Act codified into law that it is not the job of state and local law enforcement agencies to enforce federal immigration law. The report argues that by sharing ALPR data that can be used to track people’s movements with US Immigration and Customs Enforcement (ICE) and Customs and Border Protection (CBP), state and local law enforcement are violating the spirit of the law by aiding these federal agencies in their enforcement of immigration law.

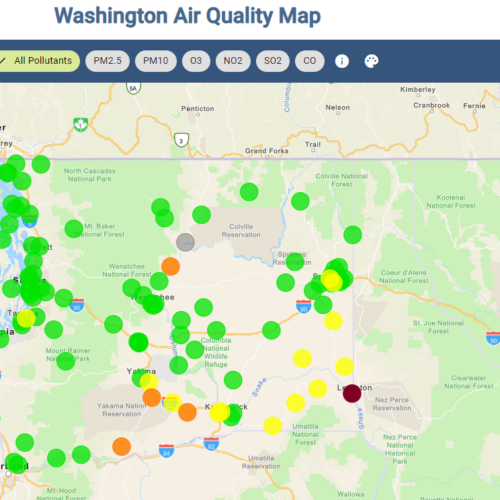

“Many local jurisdictions in Washington State operate ALPR and share the data that their cameras are gathering with either ICE or CBP, or private databases that ICE and CBP have subscriptions to and therefore can access,” Godoy said.

For example, in Okanogan County, the report found that ICE is able to directly access the ALPR software in real time to see what local law enforcement officers are tracking.

“That’s not the job of local law enforcement,” Godoy said. “And we want to make sure local law enforcement is here to protect the safety of all Washingtonians.”

At the time of publication, the Okanogan County sheriff could not be reached for comment.

In other circumstances, federal immigration enforcement officers would have to obtain a warrant to use GPS tracking devices that would provide similar information.

The report states that private companies, like LexisNexis Risk Solutions, share ALPR data with law enforcement agencies, which it says allows agencies to sidestep the warrant process.

In an email, a LexisNexis spokesperson denied that the company purchases or licenses that data. The spokesperson declined an interview and said they could not discuss the company’s contract with the Department of Homeland Security (DHS), which oversees ICE, but said its $3.2 million per year contract does not include ALPR data.

The LexisNexis webpage for frequently asked questions about its contract with DHS states that LexisNexis “does not provide the Department of Homeland Security or US Immigration and Customs Enforcement with license plate images or facial recognition capabilities.” The website states that LexisNexis provides DHS with a tool to manage information during law enforcement investigations.

A lawsuit filed in August by immigration advocates in Cook County, Illinois, claimed that the LexisNexis product Accurint sells data that is not publicly available, including ALPR databases, to law enforcement agencies such as ICE.

In 2019, the American Civil Liberties Union released a report finding ICE uses driver data from local police for deportations, including in sanctuary cities.

Godoy, the director of UW’s Center for Human Rights, said that when ALPR data is shared with immigration enforcement, the technology and actions of local law enforcement agencies are being used to carry out immigration enforcement.

“That’s what the spirit of the law prohibits,” Godoy said. “That’s why we’re concerned.”

The question is not whether the technology is bad or is being misused, she said, but that there is a lack of oversight.

“It’s the total lack of regulation of the use of the technology that’s particularly worrisome,” Godoy said.

Jen Lee, from the ACLU, offered ways for Washington to regulate the use of the technology to ensure it does not get used to enforce federal immigration law. Currently, there is no statewide law regulating ALPRs in Washington.

Lee shared a number of policies to regulate the use of the technology, including that there should be restrictions on data access and how long the data is retained. Lee said law enforcement should discard ALPR data that doesn’t match a hotlist within hours or days.

Another ACLU policy recommendation is that data should only be disclosed to a third party if that third party also conforms to restrictions on access and retention, and that there must be transparency about who accesses the data and for what purpose.

“An ACLU investigation found that more than 80 local police departments had set up their [sic] settings to share ALPR data with US Immigration and Customs Enforcement or ICE, even though the practice may violate local privacy laws, or sanctuary policies,” Lee said. “These sorts of really important local laws and policies are not going to have the intended effect if they don’t actually address automated data sharing.”

Lee shared examples of how using ALPR data for law enforcement goes wrong, such as when a Black family in Colorado was detained and handcuffed face down on the ground after ALPR technology mistook their car for a stolen motorcycle. Advocates fear that using automated systems like these could perpetuate systemic biases.

“Our stance is that any surveillance technology is going to exacerbate existing structural inequities,” Lee said.

The White House recently published a blueprint for an AI Bill of Rights, which is meant to set out the rights people should have in dealing with AI. The blueprint identifies five principles to guide the design, use and deployment of automated systems, including assurances of safety and effectiveness in the use and storing of data gathered by AI. Yet the blueprint does not address the use of AI by federal law enforcement.

While Lee said there are no pieces of legislation that specifically address ALPRs proposed for the upcoming legislative session, there are bills being introduced that would have an impact on how ALPR technology is used.

Senate Bill 5116 seeks to account for what automated decision-making tools public entities use, to ensure that those systems don’t have biased impacts. The bill has been introduced in the past two legislative sessions.

Automated decision systems can be used as screening tools in hiring processes and property valuation, and for predictive analysis of child welfare and safety. Sen. Bob Hasegawa, of the 11th District, said this can have unintended, disparate impacts because biases might be written into the technology.

Hasegawa said he’s hoping this legislation will establish, in law, transparency around which automated decision systems government agencies use.

He said the next step would be to have independent auditors review automated decision systems with the highest risk for biases.

Law enforcement agencies should be held even more accountable for any biases that come from their use of these systems, Hasegawa added, because of the high consequences those decisions can have.

“Anytime you stereotype somebody or break people down into bits that are crunchable by a computer, I think there’s always a danger of disparate impacts,” Hasegawa said.